New Dedicated AWS Cloud Control Provider Released

We've released a new dedicated StackQL provider for the AWS Cloud Control API (awscc). It provides full CRUDL (create, read, update, delete, list) operations across AWS services, with purpose-built resource definitions that take advantage of Cloud Control's consistent schema.

Resource Naming Convention

Resources follow a naming pattern that separates full CRUD access from lightweight listing:

| Resource pattern | Operations | Use case |
|---|---|---|
| `{resource}` (e.g., `s3.buckets`) | `SELECT`, `INSERT`, `UPDATE`, `DELETE` | Full CRUD with complete resource properties |
| `{resource}_list_only` (e.g., `s3.buckets_list_only`) | `SELECT` | Fast enumeration of resource identifiers |

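This separation matters at scale. A `_list_only` resource is answered by Cloud Control's list operation alone, so enumerating thousands of resources doesn't fan out into one GET call per resource and won't run into the rate limits those calls would trigger: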

```sql
-- Fast enumeration (list operation only)
SELECT bucket_name
FROM awscc.s3.buckets_list_only
WHERE region = 'us-east-1';

-- Full resource details (get operation)
SELECT *
FROM awscc.s3.buckets
WHERE region = 'us-east-1'
AND data__Identifier = 'my-bucket';
```

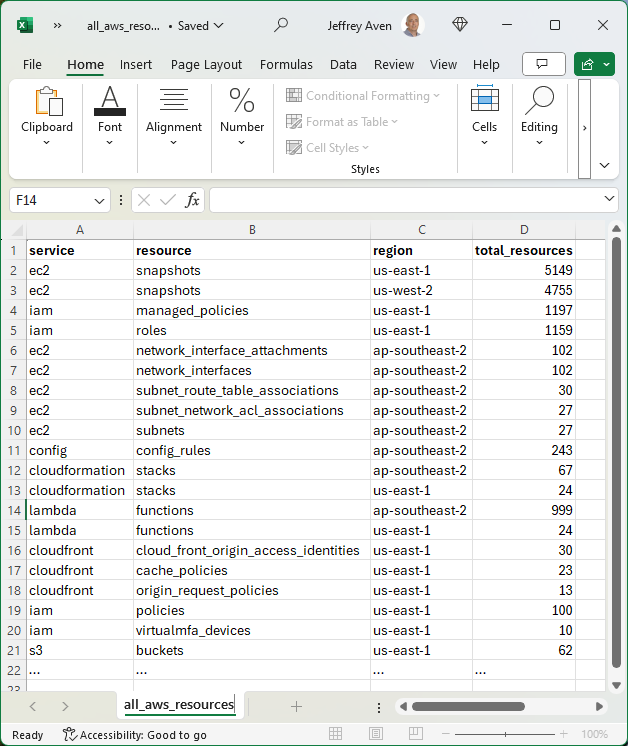
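As a sketch of how the two resource variants might be combined, the hypothetical Python helpers below build StackQL strings for a two-phase pattern: one enumeration query against `{resource}_list_only`, then per-identifier detail queries against `{resource}` only for the identifiers you actually need. The function names (`quote`, `enumerate_query`, `detail_queries`) are illustrative and not part of StackQL; the code only builds strings, which you could run in `stackql shell`. Quoting assumes standard SQL escaping (doubling embedded single quotes).

```python
# Hypothetical helpers illustrating the {resource} / {resource}_list_only
# naming convention. They only build StackQL query strings; nothing is executed.


def quote(value: str) -> str:
    """Render a SQL string literal, doubling embedded single quotes (assumed escaping)."""
    return "'" + value.replace("'", "''") + "'"


def enumerate_query(service: str, resource: str, id_column: str, region: str) -> str:
    """Phase 1: enumerate identifiers via the list-only resource."""
    return (
        f"SELECT {id_column} FROM awscc.{service}.{resource}_list_only "
        f"WHERE region = {quote(region)};"
    )


def detail_queries(service: str, resource: str, region: str, identifiers: list[str]) -> list[str]:
    """Phase 2: one get query per identifier, only for the identifiers passed in."""
    return [
        f"SELECT * FROM awscc.{service}.{resource} "
        f"WHERE region = {quote(region)} AND data__Identifier = {quote(ident)};"
        for ident in identifiers
    ]


if __name__ == "__main__":
    print(enumerate_query("s3", "buckets", "bucket_name", "us-east-1"))
    for q in detail_queries("s3", "buckets", "us-east-1", ["my-bucket"]):
        print(q)
```

Because phase two is driven by an explicit list of identifiers, you decide how many GET calls are made, which is the reason for keeping the list-only resource separate.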

Provider Coverage

The awscc provider includes:

- 237 services and 2,371 resources covering the breadth of AWS
- Full CRUDL support for all Cloud Control-compatible resources
- Consistent schema derived from AWS CloudFormation resource specifications
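The examples in this post use CloudFormation property names when inserting (`BucketName`) and snake_case column names when selecting (`bucket_name`, `instance_id`, `instance_type`). Assuming that pattern holds generally, the hypothetical helper below sketches the conversion from a CloudFormation property name to a SELECT column name. It is an illustration inferred from those examples, not the provider's actual mapping code, and its handling of acronyms (e.g. `VPCId` becoming `vpc_id`) may differ from what the provider does.

```python
import re

# Assumption inferred from this post's examples (BucketName -> bucket_name,
# InstanceId -> instance_id): SELECT columns are snake_case renderings of
# CloudFormation property names. Illustrative only.
# Insert "_" between a lowercase/digit and an uppercase letter, and before the
# last capital of an acronym that is followed by a lowercase letter.
_BOUNDARY = re.compile(r"(?<=[a-z0-9])(?=[A-Z])|(?<=[A-Z])(?=[A-Z][a-z])")


def to_column_name(cfn_property: str) -> str:
    """Convert a PascalCase CloudFormation property name to snake_case."""
    return _BOUNDARY.sub("_", cfn_property).lower()


if __name__ == "__main__":
    for name in ("BucketName", "InstanceId", "InstanceType", "Tags"):
        print(name, "->", to_column_name(name))
```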

Example Operations

Create an S3 Bucket

```sql
INSERT INTO awscc.s3.buckets (
  BucketName,
  region
)
SELECT
  'my-new-bucket',
  'us-east-1';
```
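If you generate statements like the INSERT above from scripts, a small builder keeps column order and quoting consistent. The hypothetical `insert_statement` helper below reproduces the same `INSERT ... SELECT` shape from a dictionary of CloudFormation-style properties, appending `region` as the last column as in the example. It is a sketch under stated assumptions: it handles only plain string values (nested properties such as tags are not handled here) and assumes standard SQL quote doubling.

```python
def quote(value: str) -> str:
    """Render a SQL string literal, doubling embedded single quotes (assumed escaping)."""
    return "'" + value.replace("'", "''") + "'"


def insert_statement(service: str, resource: str, region: str, properties: dict[str, str]) -> str:
    """Build an INSERT ... SELECT in the shape of the S3 example (hypothetical helper).

    Keys are CloudFormation-style property names (e.g. BucketName); dict order
    is preserved, and region is appended as the final column.
    """
    columns = list(properties) + ["region"]
    values = [quote(v) for v in properties.values()] + [quote(region)]
    return (
        f"INSERT INTO awscc.{service}.{resource} (\n"
        + ",\n".join(f"  {c}" for c in columns)
        + "\n)\nSELECT\n"
        + ",\n".join(f"  {v}" for v in values)
        + ";"
    )


if __name__ == "__main__":
    print(insert_statement("s3", "buckets", "us-east-1", {"BucketName": "my-new-bucket"}))
```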

Query EC2 Instances

```sql
SELECT
  instance_id,
  instance_type,
  tags
FROM awscc.ec2.instances
WHERE region = 'ap-southeast-2'
AND data__Identifier = 'i-1234567890abcdef0';
```

Delete a Resource

```sql
DELETE FROM awscc.lambda.functions
WHERE data__Identifier = 'my-function'
AND region = 'us-east-1';
```
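Because a DELETE removes the underlying AWS resource, scripted deletes benefit from a guard that refuses to build a statement without an explicit target. The hypothetical `delete_statement` helper below produces the same shape as the Lambda example and raises an error if either the identifier or the region is empty. The `quote` function is repeated so the block stands alone; both names are illustrative and not part of StackQL.

```python
def quote(value: str) -> str:
    """Render a SQL string literal, doubling embedded single quotes (assumed escaping)."""
    return "'" + value.replace("'", "''") + "'"


def delete_statement(service: str, resource: str, identifier: str, region: str) -> str:
    """Build a single-resource DELETE, refusing to proceed without a target."""
    if not identifier or not region:
        raise ValueError("both identifier and region are required")
    return (
        f"DELETE FROM awscc.{service}.{resource}\n"
        f"WHERE data__Identifier = {quote(identifier)}\n"
        f"AND region = {quote(region)};"
    )


if __name__ == "__main__":
    print(delete_statement("lambda", "functions", "my-function", "us-east-1"))
```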

Enhanced Documentation

The provider documentation at awscc.stackql.io now features:

- Interactive schema explorer with expandable nested property trees

- Complete field documentation including complex object structures

- Ready-to-use SQL examples for `SELECT`, `INSERT`, and `DELETE` operations

- IAM permissions reference for each resource operation

Get Started

Pull the new provider:

```bash
stackql registry pull awscc
```

Query your AWS resources:

```
stackql shell
>> SELECT region, bucket_name FROM awscc.s3.buckets_list_only WHERE region = 'us-east-1';
```

Let us know your thoughts! Visit us and give us a star on GitHub.